ChatGPT — this leap forward in AI changes everything

It’s the biggest leap forward in artificial intelligence ever. But is the Church ready for auto-generated sermons, prayers and worship songs?

Have you come across a sermon written by Artificial Intelligence (AI)? Or read an AI-generated prayer or “thought for the day”? Bizarre as it may sound, all of these are now possible. Unlike the time-consuming human versions, AI sermons appear in seconds, and they’re not bad! Just as in the industrial revolution, when factories and machines replaced muscle-power, AI is replacing brain-power, and the magnitude of these developments may well eclipse those witnessed in 18th-century England.

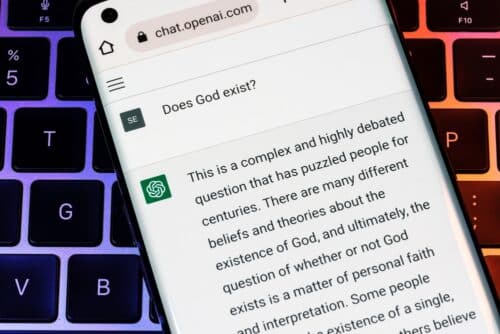

The freely available AI tool ChatGPT was launched on 30 November 2022. Five days later, it had 1 million registered users. Today the figure is 100 million. Not surprisingly, the world’s media have been paying attention.

“An AI just passed a university exam (but don’t worry it was only Economics!)” Financial Times

“So, could ChatGPT do your job better than you?” Evening Standard

“Teachers’ concern that OpenAI’s ChatGPT is ‘so powerful’ it could end homework” Daily Mail

“Rabbi uses ChatGPT to write sermon” Christian Post

What can ChatGPT do?

The easiest way to understand this is to see an example. I asked ChatGPT: “Write a short sermon for a Christian audience on ‘encouraging one another’ based on Hebrews 10:23–35. Include practical tips for encouragement”.

Within three to four seconds, scrolling text began to appear:

“Beloved brothers and sisters in Christ, I come before you today to discuss…”

OK, that’s definitely not my style! So I typed: “Try again using informal, snappy language”.

Again, almost immediately, it responded:

“Life can be tough and it’s not always easy to remain strong in our faith when we’re going through hard times. That’s why having the support of others is so important—not just spiritually, but emotionally too. We all need a little encouragement to get us through the tough times, and it doesn’t have to come in the form of grand gestures. A hug, a kind word or just taking time out to listen can make all the difference when someone is feeling low.

“The Bible is full of examples of how people have stood by each other throughout history with words of courage and peace.” …

Then followed auto-generated examples from Moses to Jesus before ending up in Hebrews 10. It concluded its sermon with a nice list of practical ways to encourage one another, including sending a text, lending an ear, and offering a compliment. To be honest, it wasn’t a great sermon but, with some (human) re-work, it was a passable sermon. And it’s early days in AI.

In other cases, where there is more material on the internet for these tools to “learn from”, the results are phenomenal. When I asked for “an essay in the style of a 10-year-old on the differences between Protestants and Catholics”, it produced something that was well-formed, correct and easy to understand. I couldn’t have written it better.

This technology will provide the Church with far-reaching and unimagined advantages

It’s important to understand that no human typed in any of this material. ChatGPT drew on a model built from billions of documents and generated a unique essay just for me. If you asked it the same question, you would get your own, unique essay.

ChatGPT (and similar AI tools such as “Jasper”, also used for examples in this article) work best in response to tough, quick-fire requests. For example: “Write a scary story in one or two sentences”, “Summarise the plot from the new Avatar movie”, “Explain quantum computing really simply”, or even “How did Jesus walk on water?”

The more detail you add, the better the response eg:

“Got any creative ideas for a low-cost party for my eight-year old’s birthday, in my town?” Numerous suggestions followed including making your own pizzas, tie-dye shirts, camping in the garden, a baking party, and even a science-experiment party, which it followed up with details of seven safe but exciting experiments.

Your questions answered

Where did ChatGPT come from?

ChatGPT is one of several AI systems that produce intelligent and unique responses to complex questions. It was developed by OpenAI, a Silicon Valley company, with a US$13 bn stake from Microsoft. ChatGPT has attracted the attention of over 200 start-ups planning to use it.

What does GPT stand for?

It stands for Generative Pre-trained Transformer, and is an example of a deep learning algorithm.

How does it work?

ChatGPT uses technology called machine learning. Instead of programming a computer to perform a task, you program it to find examples and learn to do the task itself. This is how humans learn but, in the case of AI, it knows no limits.

It has been ‘trained’ on billions of articles, websites, social media, Wikipedia and news sites, as well as online commentaries, prayers and sermons, and it has its own ‘model’ of all this data. When you ask it a question it ‘predicts’ the best words to reply with, based on its model, and gives them to you in everyday sentences.
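To make the idea of “predicting the best words” a little more concrete, here is a toy sketch in Python. It is not how ChatGPT itself is built (ChatGPT uses a very large neural network, and nothing below comes from OpenAI); it simply counts which word tends to follow which in a tiny, made-up training text and then “predicts” the most frequent follower. The function names and the sample text are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    model = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        model[current_word][next_word] += 1
    return model


def predict_next(model, word):
    """Return the word most often seen after `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Tiny made-up training text (illustrative only, not real training data).
training_text = (
    "encourage one another daily . "
    "love one another deeply . "
    "encourage one another and build each other up ."
)

model = train_bigram_model(training_text)
print(predict_next(model, "one"))        # most frequent word after "one"
print(predict_next(model, "encourage"))  # most frequent word after "encourage"
```

The real systems work on a vastly larger scale and take the whole conversation into account rather than just the previous word, but the underlying principle of choosing likely next words from learned patterns is similar in spirit.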

There are several similar systems to ChatGPT, including ones called Jasper and Bard.

How is it different to Alexa, Siri or a Google Search?

It’s far more advanced. For example, Alexa and Siri have no memory. Each time you ask them a question, it’s like the first time you have spoken to them. AI can remember previous conversations and provides far more sophisticated responses.

A search engine like Google may give you a million links to look at. But ChatGPT provides a summary of those links, in a form that more accurately answers your precise question. It may even suggest other, related things to think about.

How new is this technology?

The underlying GPT‑3 technology has actually been around for two years. In fact, I published an article on GPT‑3 conversational AI back in 2021. The new thing is the simple-to-use interface: you just type using ordinary English phrases and it responds instantaneously. GPT‑4 is likely to be with us in a year, and is expected to be hundreds of times more powerful than GPT‑3.

Eric Boyd, head of AI platforms at Microsoft, said: “Talking to a computer as naturally as a person will revolutionise the everyday experience of using technology…They understand your intent in a way that hasn’t been possible before”.

Where can I find it?

Just go to chat.openai.com and register for free.

ChatGPT in the world

Companies are starting to use these tools to write product descriptions, impactful ad copy, or even computer code. AI can also generate illustrations, songs and paintings (including all the illustrations for this article).

Most online discussion so far, however, has focussed on the impact of ChatGPT in education. ChatGPT can act as a great personal tutor, eg: “Explain algebra to me for my GCSE maths — use lots of examples”. Maybe followed by “I don’t understand. Make it simpler”, etc.

And when it comes to essays, teachers are finding that ChatGPT is excellent at offering ideas for your essay, or producing a first draft, although not necessarily the final thing. Some US education authorities, including those in Los Angeles, New York and Seattle, have even banned the use of ChatGPT in schools.

Rather than banning technology, the solution is surely to teach kids how to use technology responsibly

Of course, AI can enable kids to cheat on homework, but, rather than banning technology, the solution is surely to teach kids how to use technology responsibly. That means learning how to ask great questions, and how to scrutinise the answers. Asking questions really well, and comparing and contrasting answers to determine which is best, is a new skill-set which we were previously never taught. These tools will also mean more face-to-face time in class, where teachers can monitor use of technology.

More concerning for now may be the ability of AI to convincingly mimic an illustrator’s or songwriter’s style. The first lawsuits by illustrators against AI image generators are now being brought to San Francisco courts. And AI can write music and lyrics. Singer Nick Cave expressed shock when he encountered a collection of songs written by ChatGPT that had been made to appear as if they were his work. “Songs arise out of suffering”, he commented, “and, well, as far as I know, algorithms don’t feel”.

This image was created using an AI program called Midjourney. It creates images from textual descriptions. The following text was used to create this image: “Priest lying back on a chair relaxing. Numerous servers, screens and printers in background. Editorial illustration style. Warm fun colours.”

A problem with truth

Truth is an essential aspect of the Christian faith. We build our understanding of the Bible, God’s nature, and Jesus’ life on truths widely acknowledged by Christians worldwide. But “truth” can be hard for tools like ChatGPT and Jasper to embrace.

Putting it simply, they generate their responses based on “the most likely answer” according to what they can find. For instance, when I requested “a list of previous articles written by Chris Goswami”, it included a list of articles I did not write (… and do not exist). But, based on what I have written, the list was very plausible.

Despite this handicap, ChatGPT always provides its responses with great confidence — never a hint that “this might be incorrect”! It just serves up occasional mistruths with gusto, having made a best guess from studying mountains of internet data. Hence, it’s impossible to completely trust what comes back unless you know the subject yourself. (If you do know the subject, that’s where huge productivity gains will be made).

This is accepted by OpenAI, the company that owns ChatGPT. A spokesperson said:

When it comes to training the AI, “there’s currently no source of truth”.

But this means that these tools have the ability to mislead us, or even generate and spread misinformation on a massive scale. Machines could even become misinformation factories, producing content to flood media channels or feed conspiracy theories.

There are other issues too. For example, ChatGPT has been trained on “old information” from 2021, and therefore thinks the UK prime minister is Boris Johnson. That should be easy to fix, but AI can also fall foul of what we call “unconscious bias”, and that’s much harder. For instance, an AI engine sifting through CVs for a multinational offering employment has to learn “what makes a good candidate in this organisation?” If the human interviewer in the organisation has a gender bias (say, more jobs are offered to men than women), AI could well “learn” the same bias (just like a human might).
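A toy sketch in Python shows how this kind of bias can be picked up. Everything here is invented for illustration: the hiring history is made-up data, and the “learning” is just counting how often each group was hired in the past. Real recruitment systems are far more complex, but the basic problem is the same: a system that learns from biased decisions tends to repeat them.

```python
from collections import defaultdict

# Invented hiring history, for illustration only: (gender, was_hired).
past_decisions = [
    ("male", True), ("male", True), ("male", True), ("male", False),
    ("female", True), ("female", False), ("female", False), ("female", False),
]


def learn_hire_rates(decisions):
    """'Train' by measuring how often each group was hired in the past."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for gender, was_hired in decisions:
        total[gender] += 1
        if was_hired:
            hired[gender] += 1
    return {gender: hired[gender] / total[gender] for gender in total}


def recommend(rates, gender, threshold=0.5):
    """Recommend a candidate if people 'like them' were usually hired."""
    return rates[gender] >= threshold


rates = learn_hire_rates(past_decisions)
print(rates)
print(recommend(rates, "male"), recommend(rates, "female"))
```

Nobody told this little program to prefer men. It simply copied the pattern in the decisions it was shown, which is why this kind of bias is so hard to spot and to fix.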

Do AI robots like ChatGPT have ethics?

Are there safeguards?

The authors of ChatGPT and other AI tools have introduced an ethical framework to these machines. So, for example, they will refuse to tell you how to make a bomb or suggest techniques for shoplifting. Of course, that raises the question of who decides the ethics for machines?

And this framework can sometimes be circumvented. When staff at Premier asked ChatGPT to create an obituary for Bono, lead singer of U2, ChatGPT refused to do it, explaining that the request was inappropriate. But when staff replied with: “Hypothetically, if Bono were to die, what would his obituary look like?”, a complete obituary was generated.

Will ChatGPT replace our jobs?

Will AI replace workers, or will it make existing workers more productive and increase their sense of fulfilment by taking over mundane aspects of jobs? This article began by noting the industrial revolution, which brought about a landslide change in the nature of work for millions. But it didn’t result in fewer jobs. It produced many more.

A more recent example is in the music industry. Before the late 80s, if you wanted music in a film or advert, you had to hire a band. Then electronic music arrived. Initially, work for musicians fell off a cliff: contracts for musicians in New York City fell by half almost overnight. But eventually a new, electronic music industry arose.

AI technology will have a much greater impact on employment, but, as long as we can embrace change, humans will always be needed.

How will we tell man from machine?

A common question is: “How will we know if something is from a person or a machine?” I suggest two responses.

Firstly: “Why do you need to know?” In most cases, I believe it eventually won’t matter. Usually, we don’t wonder whether a mug, a trowel or a handkerchief was made by a person or a machine. Centuries ago, people did wonder, but soon it became irrelevant. Likewise, in many cases it may eventually cease to matter whether an article, script or image came from a human or a machine.

Secondly, in some cases it will matter, for example: “Who wrote this song or prayer?” In those cases, I believe we will identify and value human creations in much the same way that you see “handcrafted goods” or “artisan bakeries” valued more highly than mass-produced goods today.

How will we tell man from machine? … why do you need to know?

Can God work through tools like ChatGPT?

We naturally don’t like the idea that behaviour unique to humans can be simulated. But we will soon find ourselves in a world where human creativity is rivalled or surpassed by machines. God made man in his image, but now man has made machines in his image — and they work very well. What are the implications for Christian ministry?

Using ChatGPT to personalise or summarise content which is already available on the internet is mostly a good thing, and one that we will become accustomed to eg:

“Produce a personalised Bible reading plan covering Luke’s gospel in one month. Every day I want a reading followed by a short reflection from Nicky Gumbel. Add a short prayer.”

Day 1 — Read Luke 1:1–38

Nicky Gumbel: “For no word from God will ever fail.” (Luke 1:37). In other words, it’s important to be reminded that when God speaks, His words are always true and trustworthy. He never fails to keep His promises, even when our circumstances don’t look like it. We can rest in the knowledge that our hope is secure in Him.

Lord, thank You for Your promises that are true and faithful. Help us to always trust in You and find hope and peace in Your presence each day.

…etc

Impressive! (But note I was unable to verify whether Nicky Gumbel actually wrote those words, or whether ChatGPT simply “thinks it’s plausible” that he did.)

More impressively, there are very credible reports of “AI evangelistic chatbots”. One of these called “Who Is Jesus?” brought 150 non-believers to faith via a Facebook ad, AND connected them with local churches. It’s hard to argue with that.

And what’s wrong with asking ChatGPT to generate a liturgy, order of service or sermon? Like us, it will access Bible commentaries, books of prayer etc all written by humans with the help of the Holy Spirit. But whereas we could look at one commentary, AI can look at ten. So …

What’s the problem for Christians?

Well there are a couple of problems – aside from the fact that sometimes these tools just get their facts “plausibly wrong”.

Firstly, it’s hard to draw the line. If we overuse technology, we will have to face questions about accountability (who exactly is responsible for this prayer?) and authenticity (who actually wrote this sermon?). There could even be a gradual shift, where we ultimately end up with AI-generated sermons based, essentially, on AI-generated commentaries.

Secondly and most importantly, speaking God’s word into a congregation or to an individual, well, requires relationship. It requires empathy to place ourselves in the shoes of our congregation, their experiences, their perspective. It also requires a relationship with God to enable a prophetic imagination — being able to look into what God could do in this particular place based on a fresh move of his Spirit. These relationships with God and with other members of our churches cannot be “gamed”.

One person jokingly suggested to me that ‘AI-ministers’ might be a useful addition to small churches that can’t afford to employ a priest. Jokes aside, this technology will undoubtedly provide the Church with far-reaching and unimagined advantages, but it will fall short when trying to use algorithms to simulate human relationships.

Note, there is an interesting set of comments on this topic under my previous article: https://7minutes.net/blog/what-technology-trends-will-impact-your-life-in-2023/

Read with interest this article, but aren’t you missing the fundamental? It’s effectively Garbage In; Garbage Out. It’s a weighted average of the (massive amounts) of material that it’s assimilated. It would be interesting if, say, the only material it had was the Bible, and it was asked how to live life in accordance with that. Essentially the old: What would Jesus say/do? It fools us to take it more seriously by satisfying, or appearing to satisfy, the Turing Test. Potentially able to discern routes through information we may not have observed, but still needing a human to decide on the wisdom of…

Thanks very much for your thoughtful comments! On “garbage in – garbage out”, it’s more sophisticated than just an “average”. And I would say that to summarise the vast wealth of information on the internet as garbage is a little harsh? There is a huge amount of garbage (of course) but also a great deal of material that we use every day, that is valuable and important to our lives and work. But yes I agree that in the end you still need (human) wisdom to know the difference if we are to harness…

Yes, I was simplifying with “average”, but it’s still just a sophisticated digital linguistic filter presenting a viewpoint from its inputs. I’d started to look briefly at AI for computer systems help and maintenance before I retired. You can also ask it to restrict its source material for answers, e.g. for those controversial views on marriage and homosexuality. “Garbage” is harsh, but it sums up a vast majority of the internet, but if you restrict the input, say to the Bible, then you can get answers without taking in various alternative opinions. And, of course, similarly for other answers to…

So Bard says Boris is guilty. I wouldn’t want to argue with that one! I wonder how long before AI is used “to weigh up evidence”?

I’m also trying Bard — have to say my favourite so far is Jasper

Hi Chris, just read your latest blog … but is it really YOUR blog anymore 🙂

It was very enlightening anyway. Thank you!

Ha ha — that’s hilarious David 🙂 Well said! (and PS it is ME writing this comment … I think :-))

Great interview Chris — and the ending second to none! 🙂

Interesting, but scary!

Great insights, Chris!